«DeepSeek is more humane, my mother told me in May. Doctors are more like machines.» There’s a bitter irony in this, obviously, but it’s real and it’s happening faster than we’re ready to cope with. People are finding connection (and empathy and patience) in AI that they are missing in interactions with other humans – in this case, doctors.

From Weekly Filet #541, in September 2025.


What happens in a world where all reliable ways of validating truth are gone? When every text, image, video, sound recording can be fake, and everything that’s real can easily be discredited by calling it fake? One thing is guaranteed: We’re about to find out.

From Weekly Filet #541, in September 2025.


Did you know chief decision scientist is a role at Google? Me neither. Cassie Kozyrkov used to have that title, and she has so many interesting things to say in this interview. I found the first part most interesting, where she talks about what makes a good decision and why even helpful answers from AI can lead to worse decisions.

From Weekly Filet #538, in August 2025.


An opinionated rating of the five most commonly used AI models in search of the most ethical one. Short answer: «There are no ethical options — only harm reduction strategies.» Still, there are notable differences and you probably don’t want to choose the ones that represent «environmental racism in action» or «industrial-scale exploitation».

From Weekly Filet #537, in July 2025.


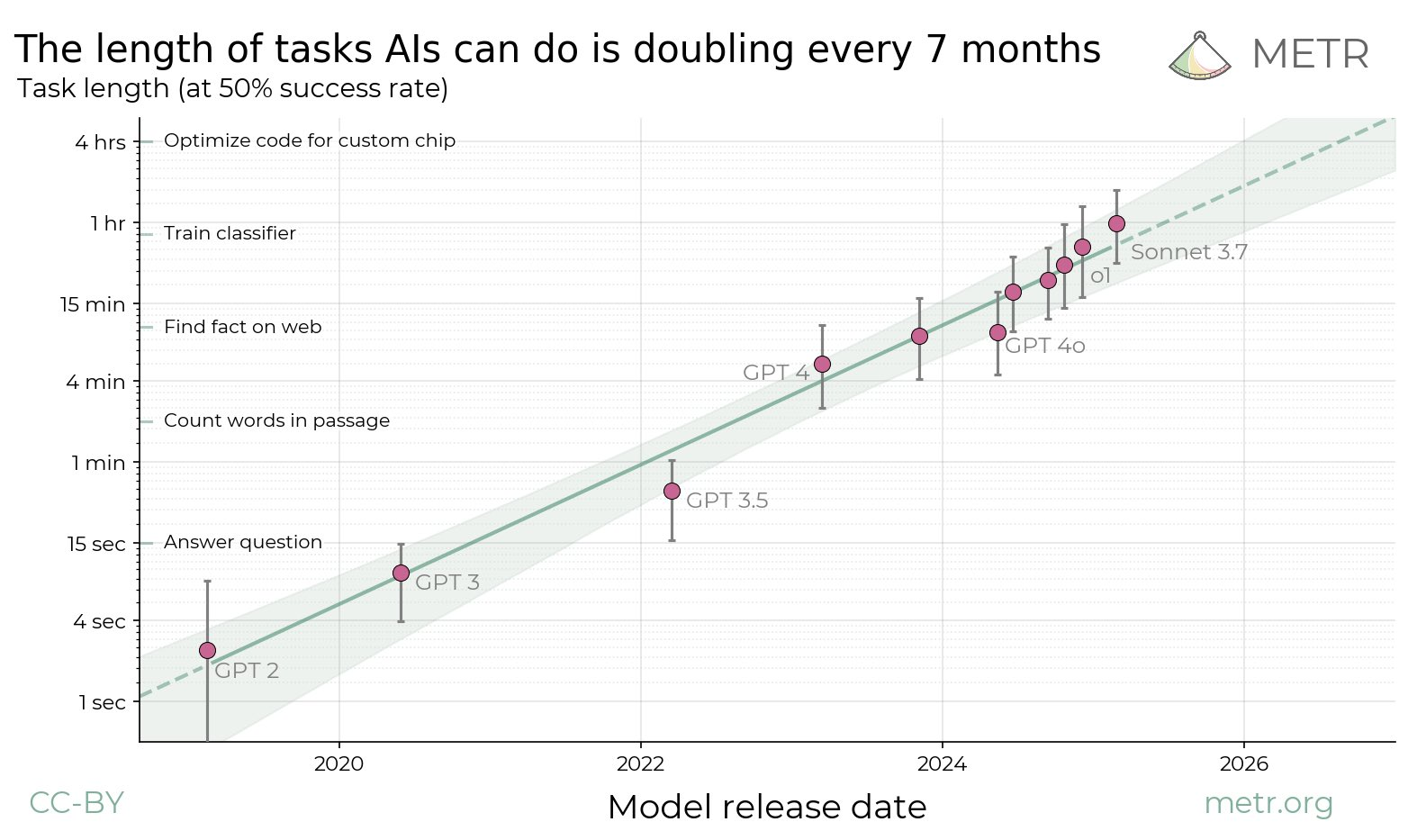

Contrary to what the title might suggest, this isn’t a forceful argument that we will reach artificial general intelligence within five years. Instead, it’s a nuanced examination of the factors that influence how artificial intelligence will evolve, and of why it’s likely that we will either get to AGI over the next couple of years or see a significant slowdown in progress afterwards. This made me pause: «Today’s situation feels like February 2020 just before COVID lockdowns: a clear trend suggested imminent, massive change, yet most people continued their lives as normal.»

From Weekly Filet #523, in April 2025.


While the web is getting polluted with AI-generated garbage, the race towards artificial general intelligence is real. The Biden administration’s AI advisor believes AI could exceed all human cognitive capabilities within the next 2-3 years. Which means: during Trump’s presidency. Obviously, other experts disagree with this timeline, but even if it’s unlikely, it’s possible, and that makes it a scenario to take very seriously.

From Weekly Filet #521, in March 2025.


This is a fascinating challenge: To test when – if ever, but yes, more likely: when – artificial intelligence surpasses human intelligence, scientists are designing a super-hard exam, called Humanity’s Last Exam. 3,000 multiple-choice and short-answer questions, on topics ranging from hummingbird anatomy to rocket engineering.

From Weekly Filet #515, in February 2025.


The binary distinction between two camps who view artificial intelligence very differently is obviously oversimplifying things, but Casey Newton does make some good observations here, especially his main point: If we want to avoid AI going really, really badly, then skeptics must stop «staring at the floor of AI’s current abilities … and accept that AI is already more capable and more embedded in our systems than they currently admit.»

From Weekly Filet #508, in December 2024.


This is a spectacular piece. Dario Amodei, the co-founder and CEO of Anthropic, lays out his vision of what a world with powerful AI might look like if everything goes right. Obviously, you would expect someone working on these models to be very optimistic, and there’s good reason to assume that not everything will go right. But still, I like how clearly he describes what the best-case scenario would look like. It makes everything tangible – and disputable. Here’s one key takeaway: «After powerful AI is developed, we will in a few years make all the progress in biology and medicine that we would have made in the whole 21st century.» What is powerful AI? Smarter than a Nobel Prize winner across most relevant fields. And able to directly interact with the world in all the ways humans can. And when will we have it? Could be as soon as 2026. Just imagine…

From Weekly Filet #501, in October 2024.


Thresholds are a good way of making sense of technological change: those moments when a technology makes a leap from barely usable to surprisingly good. AI has, undeniably, passed lots of thresholds in the past year. But here’s the catch: Unlike with other technologies, it is hard to measure when an AI crosses a threshold. Here’s where the idea of the «impossibility list» comes into play…

From Weekly Filet #497, in July 2024.


